Verifiable Artificial Intelligence

Problems in AI Training and Inference

The rapid development of AI has produced major milestones and transformed industries worldwide. However, this progress has also given rise to powerful yet opaque “black box” systems. The training and inference processes of these models often lack transparency, making it difficult to audit how they arrive at a specific decision. This opacity creates a fundamental accountability gap: when an AI system makes a critical error, determining the cause or assigning responsibility is a significant challenge.

This lack of transparency is particularly evident in generative AI, which has faced major backlash for producing content strikingly similar to copyrighted material. The core issue is provenance: because training datasets are often not disclosed, outside parties cannot verify whether a model was trained on protected works. This creates significant legal risk and undermines the trust of artists, writers, and other content creators.

Ensuring fairness is another critical problem. AI models can exhibit biases that are especially harmful in sensitive applications such as banking or hiring. Without a verifiable training process, it is nearly impossible to distinguish bias inherent in the training data from bias maliciously induced through a data poisoning attack. This inability to audit a model’s integrity makes its outputs difficult to trust for high-stakes decisions.

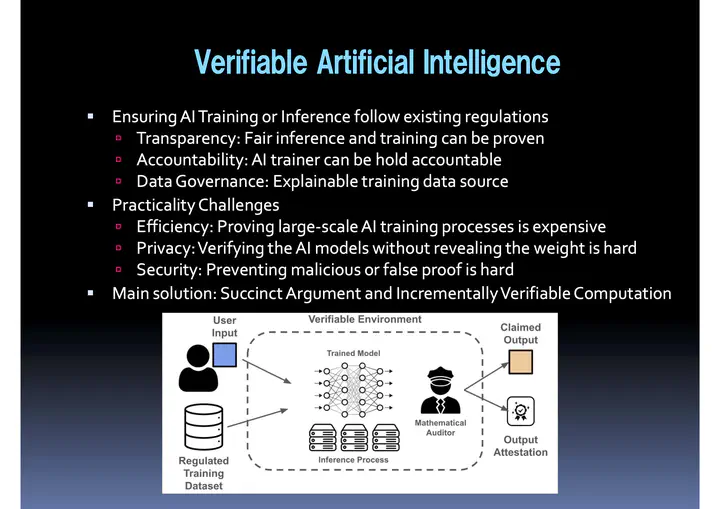

Verifiable AI for Transparency and Accountability

Research in the theory of computation and applied cryptography increasingly addresses these trust problems in AI. For example, a zero-knowledge proof of training is a cryptographic proof that a model was produced by a specified training process on an approved dataset, while revealing nothing beyond the fact that this claim holds. If such a proof were required, disputes such as copyright claims could be audited and potentially resolved cryptographically.
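Tying a proof of training to “an approved dataset” presupposes some public, binding reference to that dataset. One common building block, used here purely as an assumption about how such binding might be done, is a Merkle-tree commitment: the data owner publishes a single root hash, and any individual training example can later be shown to belong to the committed dataset with a short authentication path. The sketch below uses SHA-256 from Python’s standard `hashlib`; the function names are hypothetical, and it is neither a proof of training nor zero-knowledge.

```python
import hashlib
from typing import List, Tuple

# Hypothetical dataset-commitment helpers (illustration only, not a proof of
# training and not zero-knowledge). Leaves and internal nodes get different
# one-byte prefixes, 0x00 and 0x01 as in RFC 6962, so the two cannot collide.


def leaf_hash(example: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + example).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def build_levels(examples: List[bytes]) -> List[List[bytes]]:
    """Return every tree level, from the leaf hashes up to the single root."""
    if not examples:
        raise ValueError("dataset must be non-empty")
    levels = [[leaf_hash(e) for e in examples]]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        nxt = [node_hash(cur[i], cur[i + 1]) for i in range(0, len(cur) - 1, 2)]
        if len(cur) % 2 == 1:
            nxt.append(cur[-1])  # an unpaired last node is promoted unchanged
        levels.append(nxt)
    return levels


def merkle_root(examples: List[bytes]) -> bytes:
    """The 32-byte value a data owner would publish before training."""
    return build_levels(examples)[-1][0]


def merkle_proof(examples: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Authentication path for examples[index] as (sibling hash, sibling_is_left)."""
    if not 0 <= index < len(examples):
        raise IndexError("index out of range")
    path = []
    for level in build_levels(examples)[:-1]:
        sibling = index ^ 1
        if sibling < len(level):
            path.append((level[sibling], sibling < index))
        # otherwise this node was promoted and has no sibling at this level
        index //= 2
    return path


def verify_membership(root: bytes, example: bytes,
                      path: List[Tuple[bytes, bool]]) -> bool:
    """Recompute the root from one example and its path; compare to the commitment."""
    h = leaf_hash(example)
    for sibling, sibling_is_left in path:
        h = node_hash(sibling, h) if sibling_is_left else node_hash(h, sibling)
    return h == root


if __name__ == "__main__":
    dataset = [b"licensed-text-1", b"licensed-text-2", b"licensed-image-3"]
    root = merkle_root(dataset)
    proof = merkle_proof(dataset, 2)
    print(verify_membership(root, b"licensed-image-3", proof))  # True
    print(verify_membership(root, b"unlicensed-work", proof))   # False
```

The commitment alone only pins down which data was approved. Showing that every mini-batch the optimizer actually consumed opens to this root, without revealing the data, is the part a zero-knowledge proof of training would have to supply; the sketch does not attempt it.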

However, proving an entire training process for models with billions of parameters is computationally demanding, because the prover must account for every operation of every training step. Making verifiable AI practical at this scale requires multidisciplinary collaboration.

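In our laboratory, we draw on literature from several disciplines to tackle problems of transparency, accountability, and data governance, with a particular focus on two techniques: succinct arguments, whose proofs are short and fast to verify relative to the computation they attest to, and incrementally verifiable computation, which allows a long computation such as a training run to be proven one step at a time.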
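Incrementally verifiable computation (IVC) is usually stated for a computation that applies one step function repeatedly. The block below is a generic, informal statement of that idea; the symbols $F$, $z_i$, $w_i$, $\mathcal{P}$, and $\mathcal{V}$ are our own illustrative notation, not taken from a specific construction or from the laboratory’s work.

```latex
\begin{aligned}
z_i &= F(z_{i-1},\, w_i), && i = 1, \dots, n,\\
\pi_i &= \mathcal{P}\bigl(i,\, z_0,\, z_i;\; z_{i-1},\, w_i,\, \pi_{i-1}\bigr), && \text{prover extends the previous proof by one step},\\
b &= \mathcal{V}\bigl(i,\, z_0,\, z_i,\, \pi_i\bigr) \in \{0, 1\}, && |\pi_i| \text{ and the cost of } \mathcal{V} \text{ do not grow with } i.
\end{aligned}
```

Read against training, one could take $z_i$ to be the model weights after step $i$, $w_i$ the mini-batch used at that step, and $F$ a single optimizer update such as $z_i = z_{i-1} - \eta\,\nabla \mathcal{L}(z_{i-1}; w_i)$, with $\pi_0$ a fixed base value. Because each $\pi_i$ attests to only one new step plus the previous proof, the prover never has to build one monolithic proof of the whole run, while the verifier’s work stays independent of the number of steps, which is why IVC is a natural fit for the scaling problem described above.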